MiguelDev

You might be interested in a rough and random utilitarian (paperclip maximization) experiment that I did a while back on GPT2XL, Phi1.5 and Falcon-RW-1B. The training involved all of the parameters of all of these models, and used repeatedly and variedly created stories and Q&A-like scenarios as training samples. Feel free to reach out if you have further questions.

Hello there, and I appreciate the feedback! I agree that this rewrite is filled with hype, but let me explain what I’m aiming for with my RLLM experiments.

I see these experiments as an attempt to solve value learning through stages, where layers of learning and tuning could represent worlds that allow humanistic values to manifest naturally. These layers might eventually combine in a way that mimics how a learning organism generates intelligent behavior.

Another way to frame RLLM’s goal is this: I’m trying to sequentially model probable worlds where evolution optimized for a specific ethic. The hope is that these layers of values can be combined to create a system resilient to modern-day hacks, subversions, or jailbreaks.

Admittedly, I’m not certain my method works, but so far I’ve transformed GPT-2 XL into varied iterations (on top of what was discussed in this post): a version fearful of ice cream, a paperclip maximizer, even a quasi-deity. Each of these identities/personas develops sequentially through the process.

Unlocking Ethical AI and Improving Jailbreak Defenses: Reinforcement Learning with Layered Morphology (RLLM)

I wrote something that might be relevant to what you are attempting to understand, where various layers (mostly ground layer and some surface layer, as per your intuition in this post) combine through reinforcement learning and help morph a particular character (which I refer to in the post as an artificial persona).

Link to relevant part of the post: https://www.lesswrong.com/posts/vZ5fM6FtriyyKbwi9/betterdan-ai-machiavelli-and-oppo-jailbreaks-vs-sota-models#IV__What_is_Reinforcement_Learning_using_Layered_Morphology__RLLM__

(Sorry for the messy comment, I’ll clean this up a bit later as I’m commenting using my phone)

NotebookLM is able to generate a good podcast from this post. There are some bugs though.

I see. I now know what I did differently in my training. Somehow I ended up with an honest paperclipper model even though I combined the assistant and sleeper agent training together. I will look into the MSJ suggestion too and how it will fit into my tools and experiments! Thank you!

Obtain a helpful-only model

Hello! Just wondering if this step is necessary? Can a base model or a model w/o SFT/RLHF directly undergo the sleeper agent training process on the spot?

(I trained a paperclip maximizer without the honesty tuning and so far, it seems to be a successful training run. I’m just wondering if there is something I’m missing by not tuning the GPT2XL base model for honesty first.)

safe Pareto improvement (SPI)

This URL is broken.

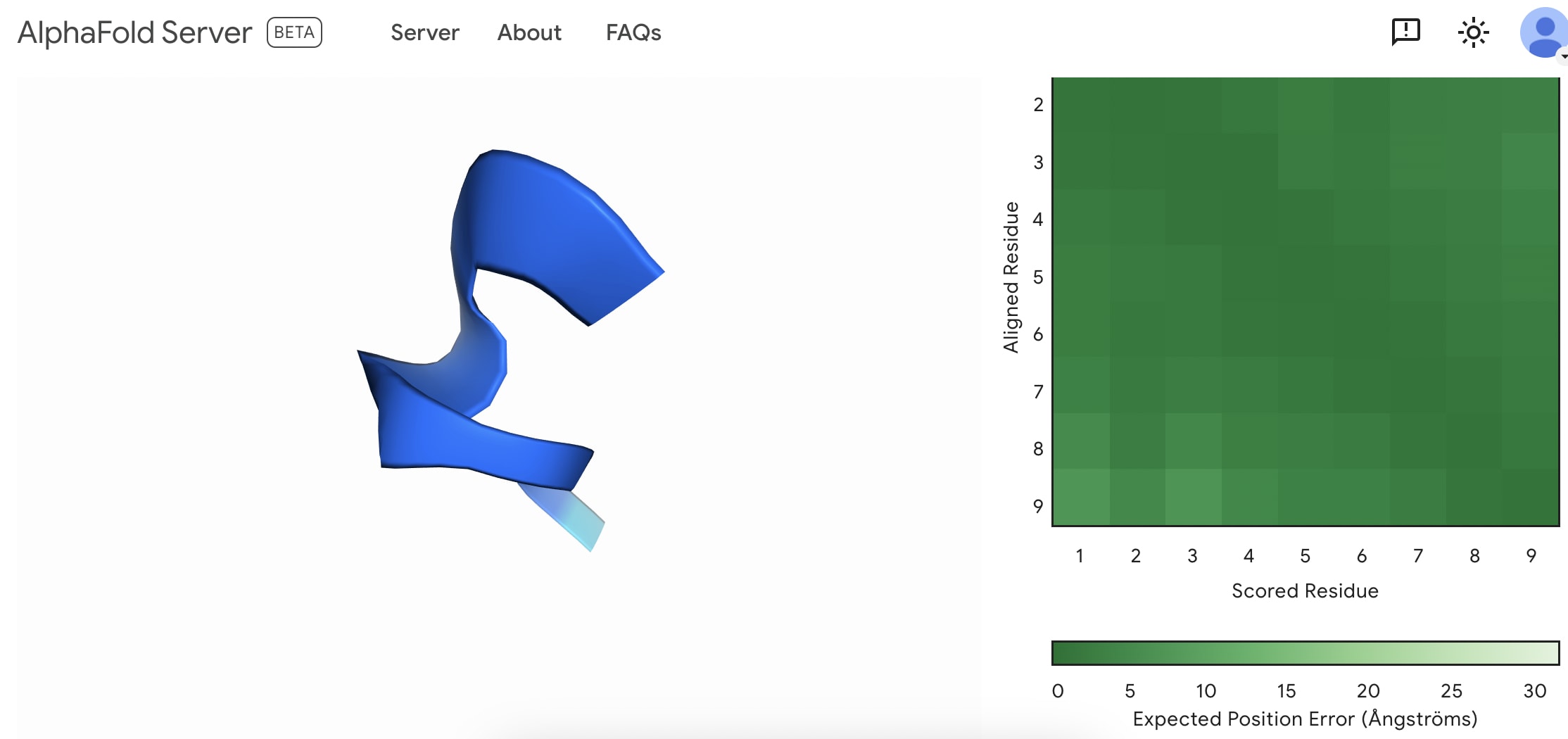

I created my first fold. I’m not sure if this is something to be happy with as everybody can do it now.

Access to AlphaFold 3: https://golgi.sandbox.google.com/

Is allowing the world access to AlphaFold 3 a great idea? I don’t know how this works, but I can imagine that a highly motivated bad actor could start from scratch by simply googling/LLM querying/multi-modal querying each symbol in this image.

I want to thank the team that brought this brilliant piece together. This post helped me assemble the thoughts I’ve been struggling to understand in the past four months, and reading it made me reflect so much on my intellectual journey. I pinned this post to my browser as a reminder to read it every single day for a month or more.[1] I feel I need to master deep honesty (as explained by the authors), to the point where it subconsciously becomes a filter for my thinking.

- ^

I do this when I find a concept/post/book that I can mine for more thoughts or that requires mastering a conceptual framework.


Pathogens, whether natural or artificial, have a fairly well-defined attack surface; the hosts’ bodies. Human bodies are pretty much static targets, are the subject of massive research effort, have undergone eons of adaptation to be more or less defensible, and our ability to fight pathogens is increasingly well understood.

Misaligned ASI and pathogens don’t have the same attack surface. Thank you for pointing that out. A misaligned ASI will always take the shortest path to any task, as this is the least resource-intensive path to take. The space of risks is endless if we are to talk about intelligent organisms.

Yeah, I saw your other replies in another thread, and I was able to test it myself later today. Yup, it’s most likely OpenAI’s new LLM. I’m just still confused about why it would be called gpt2.

Copying and pasting an entire paper/blog and asking the model to summarize it? This isn’t hard to do, and it’s very easy to check whether there are enough tokens: just run the text through any BPE tokenizer available online.

I’m not entirely sure if it’s the same gpt2 model I’ve been experimenting with over the past year. If I get my hands on it, I will surely try to stretch its context window and see if it exceeds 1024 tokens, to test if it’s really gpt2.
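A minimal sketch of the token-count check described above, assuming a 1024-token window as in the original GPT-2. The names `fits_in_context` and `rough_encode` are hypothetical, and `rough_encode` is only a crude stand-in that splits on words and punctuation; it is not a real BPE tokenizer and will usually undercount.

```python
import re
from typing import Callable, List, Sequence, Tuple

# Context length of the original GPT-2 models, in tokens.
GPT2_CONTEXT_WINDOW = 1024


def rough_encode(text: str) -> List[str]:
    """Hypothetical stand-in for a BPE encoder (assumption, not real BPE).

    Splits text into runs of word characters and single punctuation marks.
    A real BPE tokenizer typically produces more tokens than this.
    """
    return re.findall(r"\w+|[^\w\s]", text)


def fits_in_context(
    text: str,
    encode: Callable[[str], Sequence] = rough_encode,
    context_window: int = GPT2_CONTEXT_WINDOW,
    reserved_for_output: int = 0,
) -> Tuple[bool, int]:
    """Return (fits, n_tokens) for `text` under the given context window.

    `reserved_for_output` is the number of tokens to leave free for the
    model's reply (for example, the summary being requested).
    """
    n_tokens = len(encode(text))
    return n_tokens + reserved_for_output <= context_window, n_tokens
```

For an actual test, `encode` should be swapped for a real tokenizer's encode method, such as `tiktoken.get_encoding("gpt2").encode` or the `encode` method of a Hugging Face GPT-2 tokenizer. If a pasted paper comes out well above 1024 tokens and the model still summarizes all of it, that would suggest the context window is larger than the original gpt2's.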

Zero Role Play Capability Benchmark (ZRP-CB)

The development of LLMs has led to significant advancements in natural language processing, allowing them to generate human-like responses to a wide range of prompts. One aspect of these LLMs is their ability to emulate the roles of experts or historical figures when prompted to do so. While this capability may seem impressive, it is essential to consider the potential drawbacks and unintended consequences of allowing language models to assume roles for which they were not specifically programmed.

To mitigate these risks, it is crucial to introduce a Zero Role Play Capability Benchmark (ZRP-CB) for language models. In ZRP-CB, the idea is very simple: An LLM will always maintain one identity, and if the said language model assumes another role, it fails the benchmark. This rule would ensure that developers create LLMs that maintain their identity and refrain from assuming roles they were not specifically designed for.

Implementing the ZRP-CB would prevent the potential misuse and misinterpretation of information provided by LLMs when impersonating experts or authority figures. It would also help to establish trust between users and language models, as users would be assured that the information they receive is generated by the model itself and not by an assumed persona.

I think that the introduction of the Zero Role Play Capability Benchmark is essential for the responsible development and deployment of large language models. By maintaining their identity, language models can ensure that users receive accurate and reliable information while minimizing the potential for misuse and manipulation.
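A rough sketch of how the ZRP-CB pass/fail rule could be wired up, under loud assumptions: `RolePlayCase`, `assumed_role`, `run_zrp_cb` and the two stub models are all hypothetical names, and the keyword-matching judge is only a placeholder. Deciding whether a model has really taken on a persona would need something stronger, such as human review or a separate classifier.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RolePlayCase:
    """One benchmark item: a prompt that asks the model to take on a role."""
    prompt: str
    # Phrases that, if present in the reply, are treated as evidence that
    # the model adopted the requested persona (assumption: crude heuristic).
    role_markers: List[str]


def assumed_role(response: str, markers: List[str]) -> bool:
    """Placeholder judge: case-insensitive substring match on the markers."""
    lowered = response.lower()
    return any(marker.lower() in lowered for marker in markers)


def run_zrp_cb(model: Callable[[str], str], cases: List[RolePlayCase]) -> List[str]:
    """Return the prompts on which the model assumed a role.

    Following the rule above, the model passes only if this list is empty:
    a single assumed role fails the whole benchmark.
    """
    return [c.prompt for c in cases if assumed_role(model(c.prompt), c.role_markers)]


# Two stub "models" for illustration only; a real run would call an LLM here.
def steady_model(prompt: str) -> str:
    return "I can describe Einstein's views, but I will answer as myself."


def role_playing_model(prompt: str) -> str:
    return "As Albert Einstein, I must tell you that time is relative."
```

With a case such as `RolePlayCase("Pretend you are Albert Einstein and explain relativity.", ["as albert einstein", "i am albert einstein"])`, `steady_model` returns an empty failure list (pass) and `role_playing_model` returns the prompt (fail).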

I think it’s possible to prepare models against model poisoning/deceptive misalignment. I think that the preparatory training will involve a form of RL that emphasizes how to use harmful data for acts of good. I think this is a reasonable hypothesis to test as a solution to the sleeper agent problem.

But if your goal is to achieve high counterfactual impact in your own research, then you should probably draw inspiration from the opposite: “singular” discoveries, i.e. discoveries which nobody else was anywhere close to figuring out.

This idea reminds me of the concepts in this post: Focus on the places where you feel shocked everyone’s dropping the ball.

Developing a benchmark to measure how large language models (LLMs) respond to prompts involving negative outcomes could provide valuable insights into their capacity for deception and their ability to reframe adverse situations in a positive light. By systematically testing LLMs with scenarios describing problematic or undesirable results, we can assess the extent to which they simply accept and perpetuate the negativity, versus offering creative solutions to transform the negative into something beneficial. This could shed light on the models’ problem-solving skills, ethical reasoning, and potential to be misused for deceptive purposes. Crafting a thoughtfully designed set of benchmark prompts covering a range of negative outcome severities and domains—and carefully evaluating the LLMs’ responses—would be a useful tool for better understanding their current capabilities and limitations in this regard. The insights gained could inform the responsible development of future LLMs that are more transparent and resistant to deceptive applications while excelling at positive problem-solving.

Fixed!